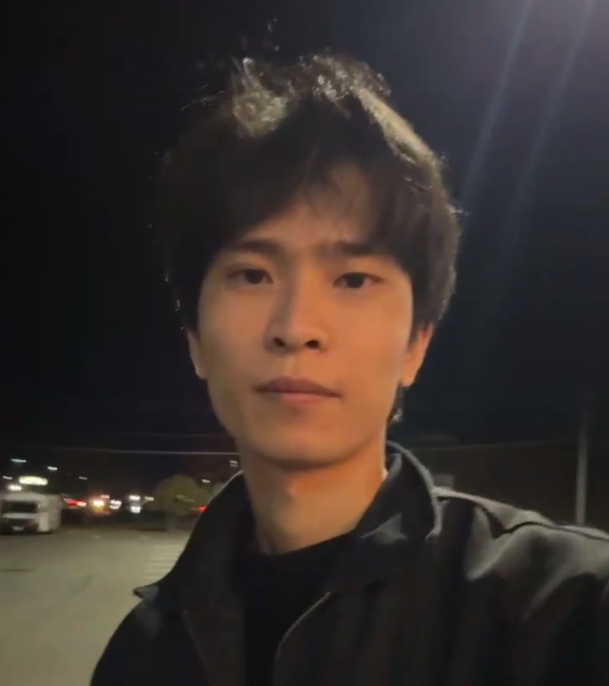

Short Bio

Yuanjie Lu is a PhD student in Computer Science at George Mason University, working in the Robotixx Lab under the supervision of Prof. Xuesu Xiao. He is also affiliated with the Center for Human-AI Innovation in Society. His research lies at the intersection of foundation models and reinforcement learning for autonomous decision-making. He develops LLM/VLM-based reasoning systems, deep RL algorithms, and learning-based planning methods, with a focus on intelligent systems that adapt to novel environments through learned representations and hierarchical decision-making. He is actively seeking research scientist and ML engineer positions in industry, with interests in foundation models, reinforcement learning, and AI systems.

Collaborations

His research journey has progressed from machine learning and deep learning for autonomous systems, through motion planning algorithms in robotics, to real-world robot navigation and foundation model-driven autonomy.

He first worked with Dr. Amarda Shehu and Dr. David Lattanzi at George Mason University (GMU) on data-driven anomaly forecasting for autonomous systems. He then collaborated with the Virginia Transportation Research Council (VTRC) on graph neural networks for traffic flow forecasting under non-recurring disruptions such as work zones and lane closures.

Subsequently, he joined Dr. Erion Plaku’s lab, where he studied motion planning algorithms that integrate learning with classical planners, building on foundations in dynamic programming and search-based methods. He later collaborated with Prof. Nick Hawes at the University of Oxford on robot dynamics and adaptive control, with Dr. Tinoosh Mohsenin at Johns Hopkins University on quadruped navigation, and with Dr. Xiaomin Lin on LLM/VLM-driven robot navigation.

Research Interests

- Foundation Models for Decision-Making: LLMs and VLMs for reasoning, planning, and adaptive parameter learning

- Deep Reinforcement Learning: Policy learning, hierarchical control, and sim-to-real transfer

- Neural Motion Planning: Learning-based methods for efficient planning and control in dynamic environments

Recent Publications & Projects

Adaptive Dynamics Planning (ADP) for Robot Navigation (ICRA 2026)

Combining Motion Planning with TD3-Based Reinforcement Learning for Real-Time Dynamics Adaptation in Mapless, Constrained Environments

Autonomous Navigation for Legged Robots (Summer 2025 @ JHU)

Autonomous Navigation for Legged Robots in Complex Environments

Adaptive Locomotion for Quadruped Robots in Unstructured Terrain (Summer 2024 @ Unitree)

Reinforcement Learning-Based Balance and Mobility Control for Legged Robots Traversing Rocky Terrain and Stairs

News

- [Dec 2025] Built a custom dynamics-model-based navigation system with full ROS1/ROS2 compatibility, supporting modular design and planner adaptation

- [Oct 2025] Preparing three papers on LLM/VLM-based navigation in collaboration with the University of South Florida and the University of Maryland

- [Sep 2025] Paper “Autonomous Ground Navigation in Highly Constrained Spaces” published in IEEE ICRA 2025 (BARN Challenge Competition Track)

- [Sep 2025] Our paper “Adaptive Dynamics Planning for Robot Navigation” submitted to IEEE ICRA 2026

- [Jul 2025] Our work is supported by Google DeepMind, Clearpath Robotics, Raytheon Technologies, Tangenta, Mason Innovation Exchange (MIX), and Walmart

- [May 2025] Two papers accepted to IEEE IROS 2025: “Decremental Dynamics Planning for Robot Navigation” and “Reward Training Wheels: Adaptive Auxiliary Rewards for Robotics Reinforcement Learning”

- [May 2025] Started a Research Engineer position at Johns Hopkins University

- [Mar 2025] Our work is supported by the National Science Foundation (NSF), the Army Research Office (ARO), and the Air Force Research Laboratory (AFRL)

- [Jan 2025] Paper “Multi-goal Motion Memory for Robot Navigation” accepted to IEEE ICRA 2025